Risk taxonomy

A context-driven approach to identifying ethical concerns

Contributors:

- John Hall (Atos SE)

- Djalel Benbouzid (Volkswagen Group)

- Georg Merz (Deutsche Bahn)

The potential risks associated with AI-driven systems depend, self-evidently, on the overall nature of their use case: a marketing recommendation bot does not expose its users to the same type or level of risk as an autonomous vehicle. Determining, understanding, and mitigating those risks can be highly subject to interpretation, which leads to a lack of transparency and trust in the system.

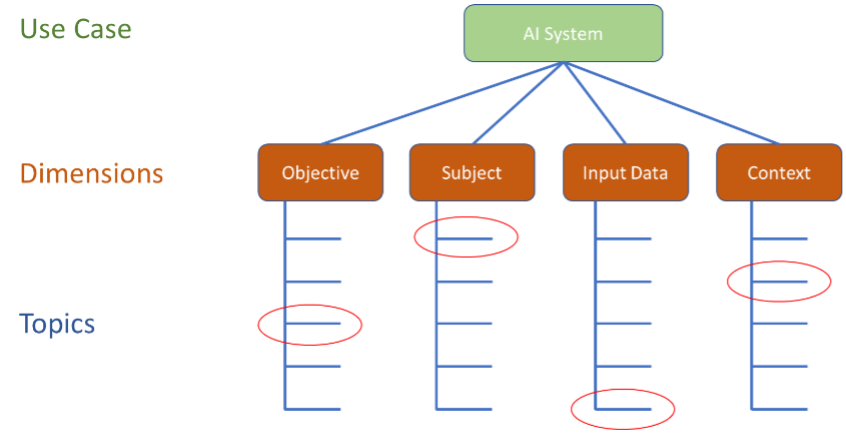

We propose a generic four-dimensional taxonomy for describing the key characteristics of any AI use case. This taxonomy serves as the framework for an online tool that highlights the principal ethical concerns for a given system.

Future enhancements will aim to align specific derived concerns with best-practice mitigations for the various stages of the development and deployment lifecycle.

The dimensions of AI system characteristics

In defining a generic taxonomy for describing the essential characteristics of an AI system, the following criteria were considered:

- Defined terms should be simple and unambiguous, allowing users with minimal instruction to make the appropriate selection for a given use case.

- Wherever possible, terms should be mutually exclusive and collectively exhaustive. There may be some special cases where terms have to be prioritized to deal with non-exclusivity driven by external factors (e.g. specific industry regulations).

- The number of dimensions and terms used should be kept to a reasonable minimum to avoid unmanageable levels of complexity in assessing the spread of dimensional combinations.

Four dimensions for the taxonomy are proposed. These are:

- Objective. What is the primary operational objective of the AI application?

- Subject. What is the primary subject that is affected by the AI application?

- Input data. What is the nature of the primary input data that is used during the execution of the AI algorithm?

- Context. In what operational context is the AI system being applied?

Within each of these dimensions a set of topics is proposed that is intended to cover the full range of anticipated use-cases.

Identifying the relevant topic in each dimension is intended to define a use case well enough for its primary ethical concerns to be derived.

The dimensions and topics within them may be subject to refinement as new AI capabilities, characteristics, and concerns emerge.

Considering each of the dimensions in turn:

Objective

The proposed topic set within Objective is:

- Automate (automate actions based on a set of inputs) e.g. autonomous driving, NLP, or business process automation

- Recommend (next-step recommendations for process or user actions) e.g. creditworthiness or personalized marketing

- Prediction / Identify (prediction of outcome, or identification of subject / event) e.g. weather forecasting, image recognition, or financial risk assessment.

For the Objective topics, there is a sense of hierarchy in relation to the degree to which AI outcomes are actioned. It is assumed that predicting an outcome is less “risky” than a clear recommendation for action, which in turn is less “risky” than a fully automated action. Automation of a process is, of course, likely to include prediction and identification; however, it is important to view this dimension as the primary or ultimate objective of an AI use case.
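This ordering can be captured as an ordinal scale. The following is a minimal Python sketch that assumes only the ranking stated above; the class name `Objective`, the helper `riskier`, and the integer values 1 to 3 are illustrative and carry no meaning beyond their order.

```python
from enum import IntEnum


class Objective(IntEnum):
    """Objective topics ordered by assumed relative risk (higher = riskier).

    Hypothetical encoding: the integers express order only and are not
    risk weights used by any tool.
    """
    PREDICTION_IDENTIFY = 1  # predict an outcome or identify a subject/event
    RECOMMEND = 2            # recommend a next step for a process or user
    AUTOMATE = 3             # take actions automatically based on inputs


def riskier(a: Objective, b: Objective) -> Objective:
    """Return whichever objective sits higher in the assumed hierarchy."""
    return max(a, b)
```

Because `IntEnum` members compare as integers, `riskier(Objective.RECOMMEND, Objective.AUTOMATE)` returns `Objective.AUTOMATE`. An ordinal encoding like this says nothing about how much riskier one objective is than another.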

Subject

The proposed topic set within Subject is:

- People (human beings) e.g. facial recognition or employment vetting

- Processes (industrial, business, or government processes) e.g. high-frequency financial markets trading, benefits assessments, or manufacturing quality control

- Objects (physical objects) e.g. autonomous vehicles, smart buildings, robots, or traffic signals.

- Animals (living organisms with a nervous system, other than humans) e.g. automated cow milking, or animal disease management.

- Environment (natural physical environment) e.g. agricultural crops, atmosphere, oceans, or space.

For the Subject dimension, it is important to correctly identify the primary subject that is affected by the AI application. For example, analyzing job applications may be considered part of an HR process, but this process is directly and inextricably linked to the individual who submitted the job application, so the Subject is “People”, implying heightened concerns over fairness and privacy. This contrasts with an autonomous vehicle, for which the Subject is “Objects” because it is the vehicle that is being directly controlled, even though there may be knock-on implications for people as passengers, pedestrians, or other road users.

Input Data

The dimension of Input Data is used primarily to determine the sensitivity of the data used for AI algorithm execution. It comprises three topics:

- Private Data (Data that has defined individual or corporate ownership) e.g. personal banking information, or data under the scope of GDPR

- Public Data (Data that is considered to be held within the public domain. It relates to stored data sets rather than more transient Ambient Data) e.g. Internet, social media, public records

- Ambient Data (Data, information or signals that have no clearly defined ownership) e.g. city noise, air temperature, voice commands

For a given use case, there may be some overlap; for example, Ambient Data may be collected and become Public or even Private Data. It is important to identify the nature of the Input Data at the time of use.

Context

The dimension of Context is the most diverse in terms of topic areas. The proposed topic set is:

- Critical Infrastructure / Defense (Use cases relating to infrastructure or processes whose failure has significant and widespread implications) e.g. Utility networks, air traffic control, border protection.

- Smart Infrastructure (Use cases relating to objects or infrastructure capable of fully or semi-automated intelligent operation) e.g. Smart homes, autonomous driving, automated factories.

- Personal Health (Use cases relating to health and wellbeing of individuals or groups) e.g. Medical image analysis, symptom diagnosis, connected health solutions.

- Finance (Use cases relating to individual, corporate or national finance) e.g. Banking, insurance, investment.

- Government / Society (Use cases relating to official national processes) e.g.

- Education / Media

- Employment / Leisure

- Retail

- Social Media
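Taken together, the topic lists above define the space of combinations that has to be assessed. Counting the topics as currently listed (three for Objective, five for Subject, three for Input Data, and nine for Context), the number of possible combinations is:

$$
N = \underbrace{3}_{\text{Objective}} \times \underbrace{5}_{\text{Subject}} \times \underbrace{3}_{\text{Input Data}} \times \underbrace{9}_{\text{Context}} = 405
$$

This figure holds only for the current topic sets; since every topic added to any dimension multiplies it, refinements to the taxonomy change the count quickly.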

Classifying use cases

The topics within the four defined dimensions can be collectively used to describe any given AI use case. For example: an intelligent automated rail network control system would be defined as:

Automate; Objects; Private Data; Critical Infrastructure / Defense

The logic behind this classification is as follows:

The control of the network is intelligently automated through actions like points activation and signal notifications. The use case may involve dynamic prediction of train movements, but ultimately the objective is to automate the control of trains on the network.

The Subject is Objects (trains, signals, points, etc.). Although the primary function of a train may be to carry people, people are not the entity that is the focus of the AI control.

Input Data is Private Data because, although the AI algorithms may use ambient data such as local weather conditions, the main source of insight will be from rail operator data – network capacities, maintenance schedules, timetables etc.

Finally, the Context is Critical Infrastructure / Defense: the rail network is considered part of a country’s critical national infrastructure, and disruptions, failures, and accidents have significant implications.
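A classification like this can be represented as a simple record holding one topic per dimension. The following minimal Python sketch is hypothetical: the names `TOPICS` and `UseCase` are illustrative and are not part of the Ethical Concern Finder. It only checks that each chosen topic belongs to its dimension's topic set as listed in this document.

```python
from dataclasses import dataclass

# Topic sets for each dimension, copied from the lists in this document.
TOPICS = {
    "objective": ("Automate", "Recommend", "Prediction / Identify"),
    "subject": ("People", "Processes", "Objects", "Animals", "Environment"),
    "input_data": ("Private Data", "Public Data", "Ambient Data"),
    "context": (
        "Critical Infrastructure / Defense", "Smart Infrastructure",
        "Personal Health", "Finance", "Government / Society",
        "Education / Media", "Employment / Leisure", "Retail", "Social Media",
    ),
}


@dataclass(frozen=True)
class UseCase:
    """One topic per dimension; construction fails on an unknown topic."""
    objective: str
    subject: str
    input_data: str
    context: str

    def __post_init__(self) -> None:
        # Reading attributes is allowed on a frozen dataclass.
        for dimension, allowed in TOPICS.items():
            value = getattr(self, dimension)
            if value not in allowed:
                raise ValueError(f"{value!r} is not a {dimension} topic")


# The rail network control example, classified as in the text.
rail_control = UseCase(
    objective="Automate",
    subject="Objects",
    input_data="Private Data",
    context="Critical Infrastructure / Defense",
)
```

Making the record frozen keeps a classification immutable once it has been validated, so it can also be used as a dictionary key when looking up concerns per combination.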

The combination of these topics helps identify the main ethical concerns and hence the required mitigation actions. A change in the perspective of any of the four dimensions can be expected to have an influence on the related ethical concerns. For example, if the rail network control application was focused on predicting network performance, safety might be less of a concern than it would be in the case of full network automation.

A tool for assessing any given use case

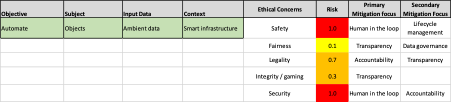

The Ethical Concern Finder tool takes each combination of topics within the four defined dimensions and (based on collective insights and perceived best practices) assigns a relative risk rating to a set of possible concerns.

An example of the tool's output is shown in the figure. The specific use case given is that of an autonomous vehicle. The greatest derived concerns relate to Safety and Security (they get a maximum rating of 1.0). Legality gets a rating of 0.7: it could be argued that an autonomous car making an emergency left turn where such a turn is not usually allowed would be acceptable if it avoids endangering someone's safety. Concerns over system “gaming” and “fairness” are somewhat secondary for this use case.
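To make the shape of such an output concrete, here is a hypothetical Python sketch that holds the ratings quoted above and ranks them. Only the Safety, Security, and Legality values come from the text; the ratings for “gaming” and “fairness” are not stated, so they are omitted, and the function name `rank_concerns` is illustrative.

```python
# Ratings quoted in the text for the autonomous-vehicle example.
# Ratings for "gaming" and "fairness" are not given, so they are left out.
autonomous_vehicle = {"Safety": 1.0, "Security": 1.0, "Legality": 0.7}


def rank_concerns(ratings: dict[str, float]) -> list[tuple[str, float]]:
    """Order concerns from highest to lowest rating, breaking ties by name."""
    return sorted(ratings.items(), key=lambda item: (-item[1], item[0]))


for concern, rating in rank_concerns(autonomous_vehicle):
    print(f"{concern:<10} {rating:.1f}")
```

Ties (Safety and Security both at 1.0) are broken alphabetically here; that is an arbitrary choice made only to give a stable ordering.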

The process of evaluating the ethical concerns and their relative risk quotients has several stages:

- Elimination of “invalid” topic combinations. A number of scenarios could be described within the four dimensions that would not reflect practical use cases (at least at this point in time). These include the Automation of People, Animals, or the Environment; dealing with Objects or Animals in the context of Finance; and considering Private Data in the context of Animals. Such intersections are marked as “invalid” to reduce the number of topic combinations that need to be assessed.

- Identification of areas of concern. For each topic within each dimension, the primary ethical concerns are identified together with their relative risk ratings. For example, there may be no Privacy concerns when the Subject is Animals, whereas when the Subject is People, privacy is of the utmost concern. The relative degree of concern can be expressed as a real number between 0 and 1, where 0 reflects no perceived concern and 1 reflects a major concern.

- Calculation of weighted risk level. The respective concern weightings for the characteristics of a defined use case are compounded to propose a risk level between 0 and 1. Each use case is likely to have more than one ethical concern, each with its own relative risk rating. These ratings are meant to serve as a guideline for applying the appropriate mitigation actions during the development and operation of an AI application.

- Recommended focus for concern mitigation. The final step in the Ethical Concern Finder tool is to propose areas of focus for dealing with perceived risks and to point to possible best practices. These recommendations are not intended to be a magic formula for eliminating ethical risks in AI applications, but they should help avoid blind spots at key points within the AI lifecycle.
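The first three stages can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Ethical Concern Finder's implementation: the invalid-combination rules are only the three examples named in the first stage, the weights table `CONCERN_WEIGHTS` and the function names `is_valid` and `risk_levels` are hypothetical, and because the text does not say how weightings are “compounded”, the plain arithmetic mean is used as a stand-in.

```python
from statistics import fmean

# Hypothetical per-topic concern weights on the 0..1 scale described above
# (0 = no perceived concern, 1 = major concern). Illustrative values only;
# they are NOT the Ethical Concern Finder's actual data.
CONCERN_WEIGHTS = {
    "Automate": {"Safety": 0.9, "Privacy": 0.2},
    "Prediction / Identify": {"Safety": 0.3, "Privacy": 0.2},
    "People": {"Privacy": 1.0},   # privacy of utmost concern for People
    "Animals": {"Privacy": 0.0},  # no privacy concern for Animals
    "Objects": {"Safety": 0.8, "Privacy": 0.1},
    "Private Data": {"Safety": 0.1, "Privacy": 0.7},
    "Critical Infrastructure / Defense": {"Safety": 0.9, "Privacy": 0.2},
}


def is_valid(objective: str, subject: str, input_data: str, context: str) -> bool:
    """Stage 1: reject the impractical intersections named in the text."""
    if objective == "Automate" and subject in {"People", "Animals", "Environment"}:
        return False
    if subject in {"Objects", "Animals"} and context == "Finance":
        return False
    if subject == "Animals" and input_data == "Private Data":
        return False
    return True


def risk_levels(objective: str, subject: str, input_data: str,
                context: str) -> dict[str, float]:
    """Stages 2-3: look up each topic's concern weights and combine them."""
    if not is_valid(objective, subject, input_data, context):
        raise ValueError("invalid topic combination")
    topics = (objective, subject, input_data, context)
    concerns = sorted({c for t in topics for c in CONCERN_WEIGHTS.get(t, {})})
    levels = {}
    for concern in concerns:
        # A topic with no listed weight for this concern contributes 0.
        weights = [CONCERN_WEIGHTS.get(t, {}).get(concern, 0.0) for t in topics]
        # Assumed combination rule: the plain mean, which stays within 0..1.
        levels[concern] = fmean(weights)
    return levels


rail = ("Automate", "Objects", "Private Data", "Critical Infrastructure / Defense")
rail_prediction = ("Prediction / Identify", *rail[1:])
print(risk_levels(*rail))
print(risk_levels(*rail_prediction))
```

With these made-up weights, the prediction-focused variant of the rail example gets a lower Safety level than the fully automated one (about 0.53 versus 0.68), mirroring the effect described for the rail network earlier. A multiplicative rule or a maximum would be equally plausible readings of “compounded”; the mean was chosen only because it keeps the result in range without saturating.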

As experience and understanding of use cases grow, it will be possible to refine the assumptions that underpin the Ethical Concern Finder tool.

Last update: 2022.01.06, v0.1